Many financial institutions are developing a multi-cloud strategy to reduce operational risk, adhere to regulatory requirements, and benefit from a choice of cloud services and pricing from multiple vendors.

In this post, we share an infrastructure blueprint for multi-cloud data processing with a portable multi-cloud architecture. Using a specific example, we show how the Databricks Lakehouse platform significantly simplifies the implementation of such an architecture, making it easier for organizations to meet regulatory requirements and reduce operational cost and risk.

The UK Bank of England and the Prudential Regulation Authority (PRA) have recently announced new regulations for systemically important firms in the banking and financial services industry (FSI). These require firms to have plans in place for “Stressed Exit” scenarios relating to services from third parties, such as cloud IT vendors:

These regulations focus on ensuring business continuity during changes to service providers, including cloud services. There is an onus on FSI firms to demonstrate how the operational arrangements supporting critical services can be maintained in the event that services are moved to a different provider.

Although these regulations are UK-specific, global banks and other FSI companies will need to cater for them if they want to do business in the UK. There is also a possibility that this type of regulatory model will be adopted in other jurisdictions in the future (similar requirements are currently being discussed in other markets such as ASEAN).

Global FSI companies need a strategy to future-proof their cloud architecture for:

- Regulatory changes requiring a multi-cloud solution in the future

- Operational risk of a cloud vendor changing its pricing model or service catalog

- Operational risk of a cloud vendor deciding to withdraw from a geographical region

- Geo-political change requiring a move from cloud to on-premises in a particular geographical region

There are three key requirements for achieving inter-cloud and hybrid-cloud (cloud to on-premises) portability:

- An open, portable data format and data-management layer with a common security model

- Foundational application services (such as database and AI processing) that are common between clouds and can also be deployed on-premises

- Open application development coding standards and APIs, allowing a single codebase to be shared between cloud-vendor and on-premises platforms

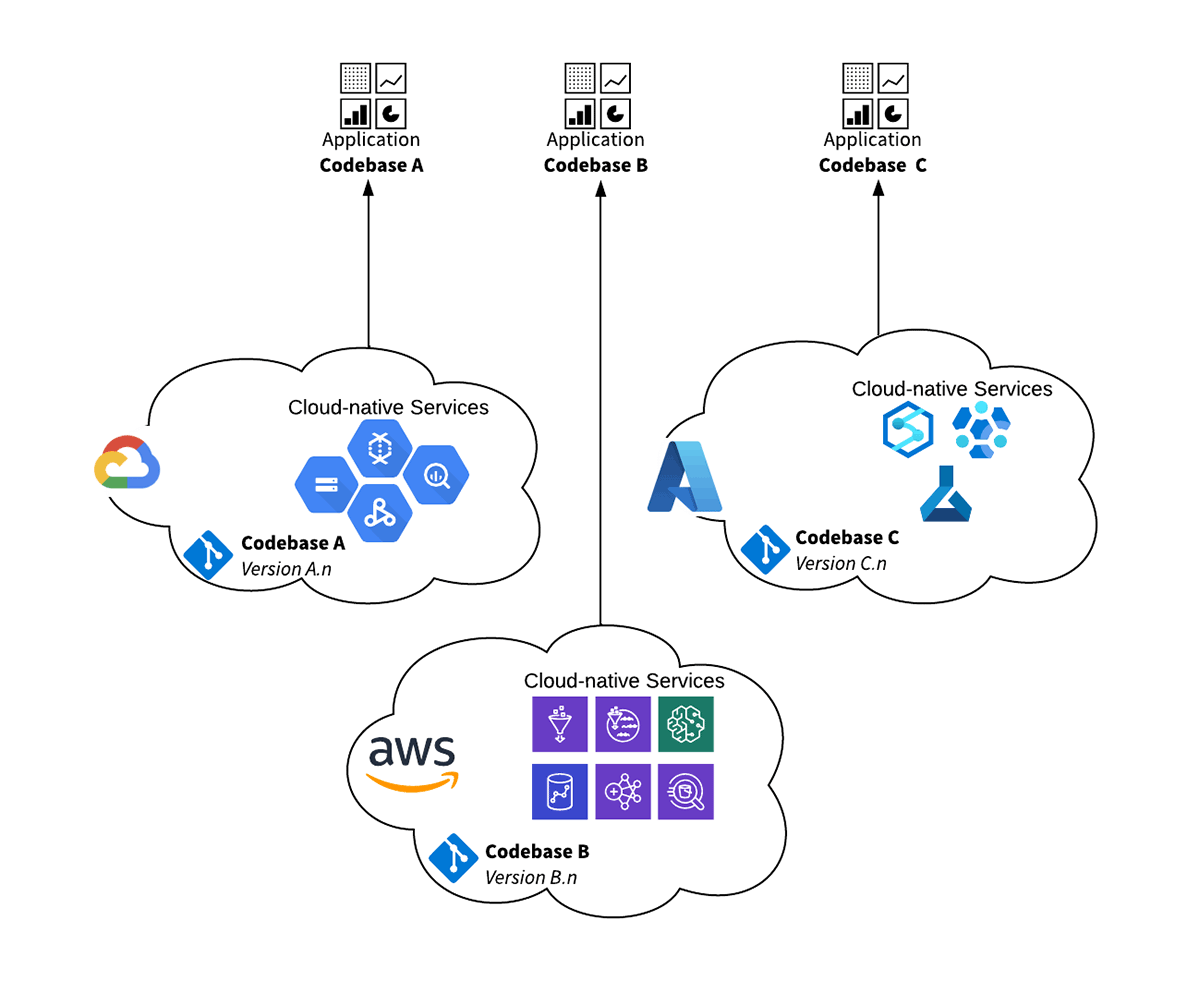

The Cloud Native Challenge

A typical approach for deploying data applications is to use cloud vendor native services. This was a common pattern in the initial stages of cloud adoption, with organizations selecting a preferred cloud vendor for the majority of their workloads. However, as companies adopt a multi-cloud strategy, this creates a set of challenges:

- Multiple codebases must be maintained to run a single application against multiple cloud-vendor native services

- Multiple cloud infrastructure builds must be maintained to support the build of the application in different cloud environments

- Multiple sets of cloud-service skills must be curated in the organization to ensure the application can be supported in all environments

This approach becomes expensive to manage and maintain through application release cycles and through evolving regulatory, geo-political and vendor service changes. The operational cost of maintaining multiple codebases for different systems that share common functional and non-functional requirements can become large. Ensuring equivalent results and accuracy between codebases, and maintaining multiple skill sets and infrastructure builds, account for a large part of the overhead.

In addition to this, many organizations end up building and running yet another equivalent service in their own data centers (on-premises) for classes of data that cannot be stored and processed with a third-party cloud vendor. This is usually due to local regulatory or client-sensitive data requirements that are specific to a geographical region.

We have recently seen an example of this scenario at a global bank with a large presence in the UK. The bank has a requirement to run daily Liquidity Risk calculations on its primary strategic cloud vendor platform. It now has the challenge of maintaining equivalent functionality on a different cloud platform to satisfy regulatory requirements. The main challenges it faces are the cost of maintaining two different codebases, as well as achieving equivalent performance and matching results on both systems. Over time, the codebase for the primary application has been optimized and has diverged from the secondary system to the point where a major project is needed to realign them.

Blueprint for Multi-Cloud Data Processing

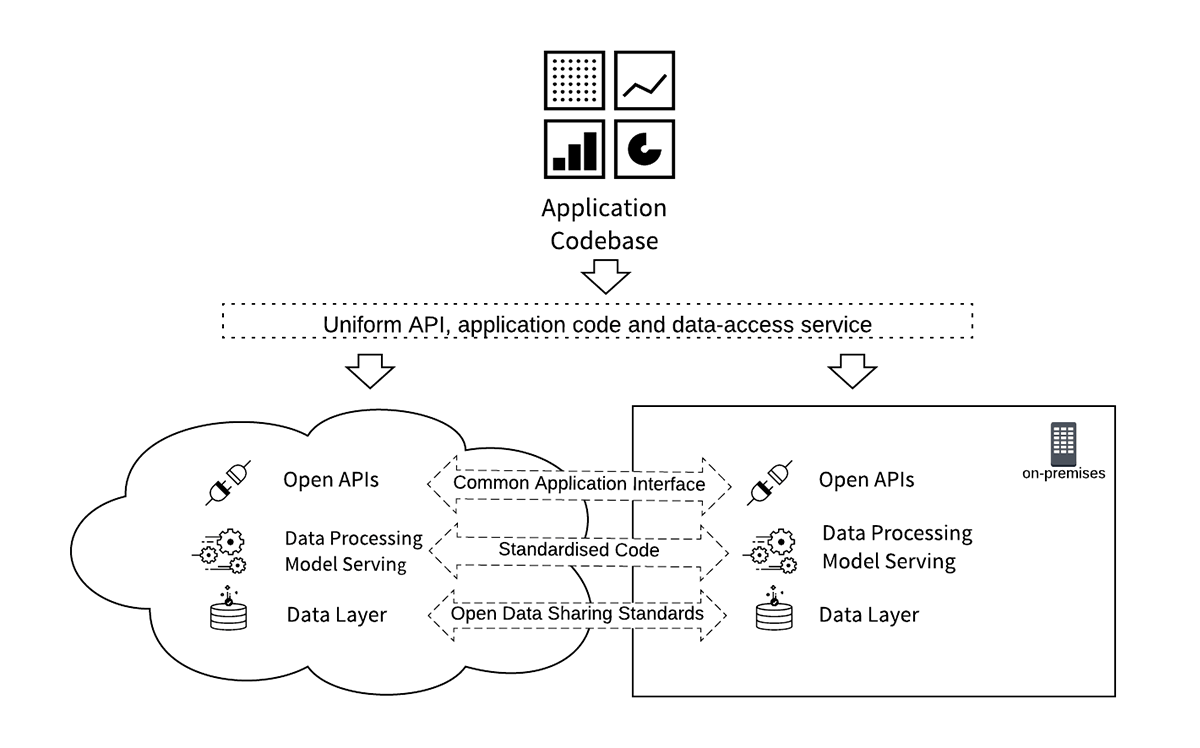

Here we present a generic blueprint for enterprise data processing that caters for a modern multi-cloud, multi-geography and multi-regulatory environment.

This is based on the following foundations:

- Open Data: data should be stored in an open, portable format, with a common access and processing interface for all clouds. This should also be available for vendor-independent on-premises deployments.

- Open API Standards: business applications access the data and data processing services using non-proprietary, standardized interfaces and APIs. These should be commonly available across multiple cloud platforms and on-premises deployments.

- Code Portability: business logic code, ML/AI models and data synchronization services can be deployed to all the target cloud platforms and on-premises environments from a single code branch without being refactored.
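The idea of deploying from a single code branch can be illustrated with a minimal sketch in plain Python. It assumes that everything environment-specific is reduced to a small configuration record, so the job definition itself never changes between targets. The bucket, container, account and host names, the `lakehouse/` prefix and the `Target`, `table_path` and `job_plan` helpers are all hypothetical and are not taken from any Databricks API; only the storage URI schemes (`s3`, `abfss`, `gs`, `hdfs`) are standard.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Target:
    """Environment-specific settings; the only part that differs per platform."""
    name: str
    uri_scheme: str
    root: str


# Hypothetical deployment targets. All names below are placeholders.
TARGETS: Dict[str, Target] = {
    "aws": Target("aws", "s3", "example-bucket"),
    "azure": Target("azure", "abfss", "example-container@exampleaccount.dfs.core.windows.net"),
    "gcp": Target("gcp", "gs", "example-bucket"),
    "onprem": Target("onprem", "hdfs", "namenode.example.internal:8020"),
}


def table_path(target_name: str, table: str) -> str:
    """Resolve the storage location of a table for the given target."""
    target = TARGETS[target_name]
    return f"{target.uri_scheme}://{target.root}/lakehouse/{table}"


def job_plan(target_name: str) -> Dict[str, str]:
    """Describe one job. The logic is identical for every target;
    only the resolved paths differ."""
    return {
        "input": table_path(target_name, "positions"),
        "output": table_path(target_name, "liquidity_risk"),
    }
```

Calling `job_plan("aws")` and `job_plan("onprem")` returns the same job description with different storage locations, which is the property that allows one code branch to serve every environment.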

Using a data analytics architecture with APIs and data services that are standardized across different infrastructure platforms provides a simpler and more cost-effective solution. Operational cost is significantly reduced by having a single codebase, while still retaining control of localized physical implementations to comply with regulatory requirements across multiple geographies.

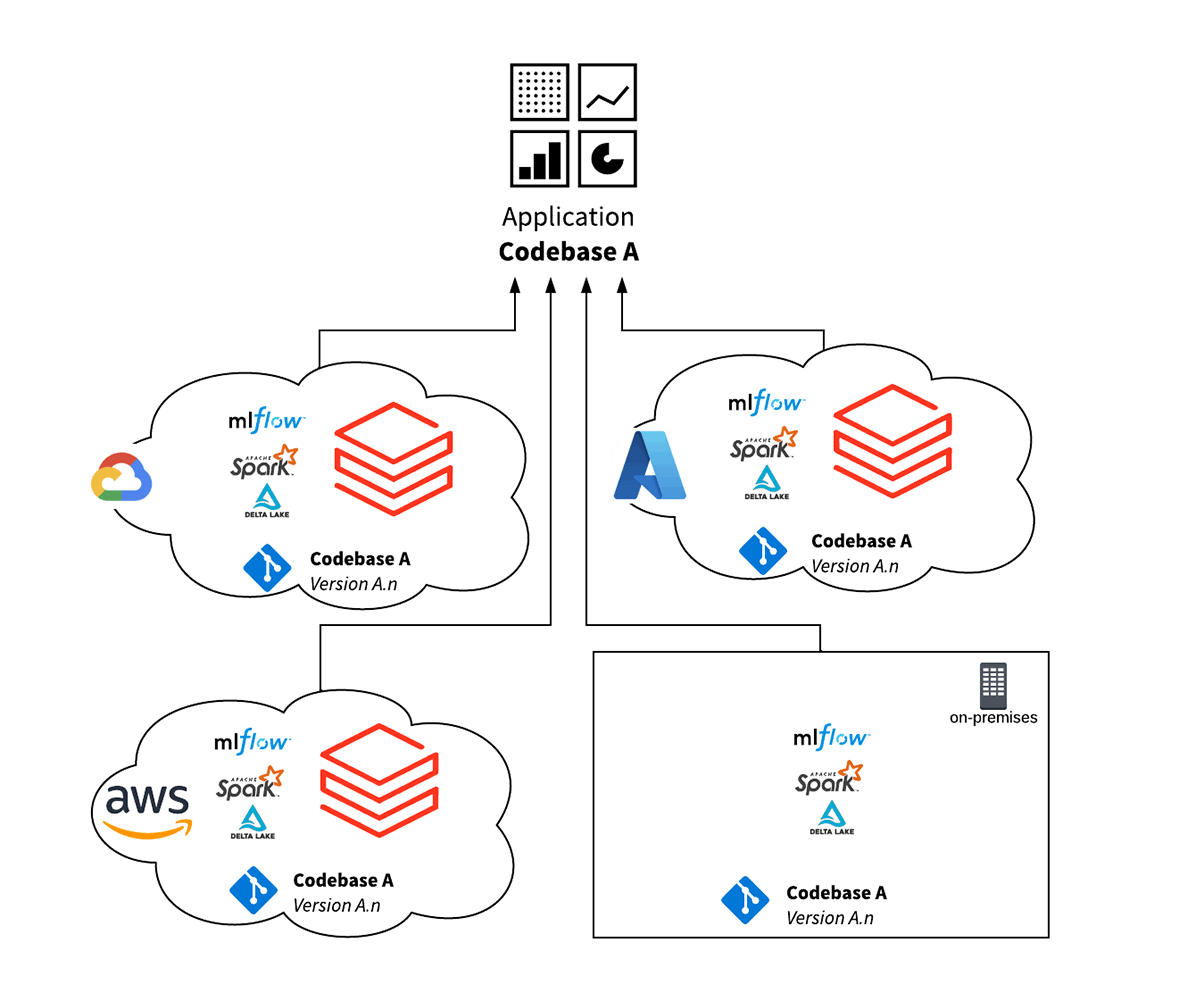

Databricks Solution for Hybrid Cloud Data Processing

Databricks’ Lakehouse Platform simplifies multi-cloud deployments with its open standards and open protocols approach, which is closely aligned with the generic blueprint outlined above. Because the Lakehouse and its underlying open-source components provide a common functional interface across all clouds, a single codebase can run against multiple cloud vendor environments.

The features and benefits of this design are:

- Delta Lake provides a high-performance, open-standards data layer, allowing seamless data interoperability and portability between environments. This also simplifies data reconciliation and integrity checks across environments.

- A single codebase for multiple cloud infrastructures reduces the cost of operations and testing, enabling developers to focus on feature development and code optimization instead of keeping environments in sync.

- The Databricks stack is based on open-source, non-proprietary standards, which means that a wider skills base is available to develop and maintain the codebase. This reduces the cost of implementation and operation.
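As an illustration of what a cross-environment reconciliation check might look like, here is a minimal sketch in plain Python. It is not a Delta Lake or Databricks API: the `table_fingerprint` and `reconcile` helpers are hypothetical, and the sketch assumes both environments can export the rows of a table as dictionaries with identical column names and value types. The fingerprint is independent of row order, so two copies of a table match even if each platform returns its rows in a different sequence.

```python
import hashlib
from typing import Iterable, Mapping, Tuple

# Keeps the running sum the same width as a SHA-256 digest.
MODULUS = 2 ** 256


def table_fingerprint(rows: Iterable[Mapping[str, object]]) -> Tuple[int, str]:
    """Return (row count, order-independent digest) for a collection of rows."""
    total = 0
    count = 0
    for row in rows:
        # Canonical form: columns sorted by name, so column order is irrelevant.
        canonical = "|".join(f"{key}={row[key]!r}" for key in sorted(row))
        row_hash = hashlib.sha256(canonical.encode("utf-8")).digest()
        # Modular addition is commutative, so row order is irrelevant, and
        # unlike XOR it does not cancel out when a row appears twice.
        total = (total + int.from_bytes(row_hash, "big")) % MODULUS
        count += 1
    return count, f"{total:064x}"


def reconcile(primary: Iterable[Mapping[str, object]],
              secondary: Iterable[Mapping[str, object]]) -> bool:
    """True when both environments hold the same rows, in any order."""
    return table_fingerprint(primary) == table_fingerprint(secondary)
```

Because values are compared through their `repr`, an integer `100` and a float `100.0` count as different; a real check would need to normalize types and agree on numeric tolerances first.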

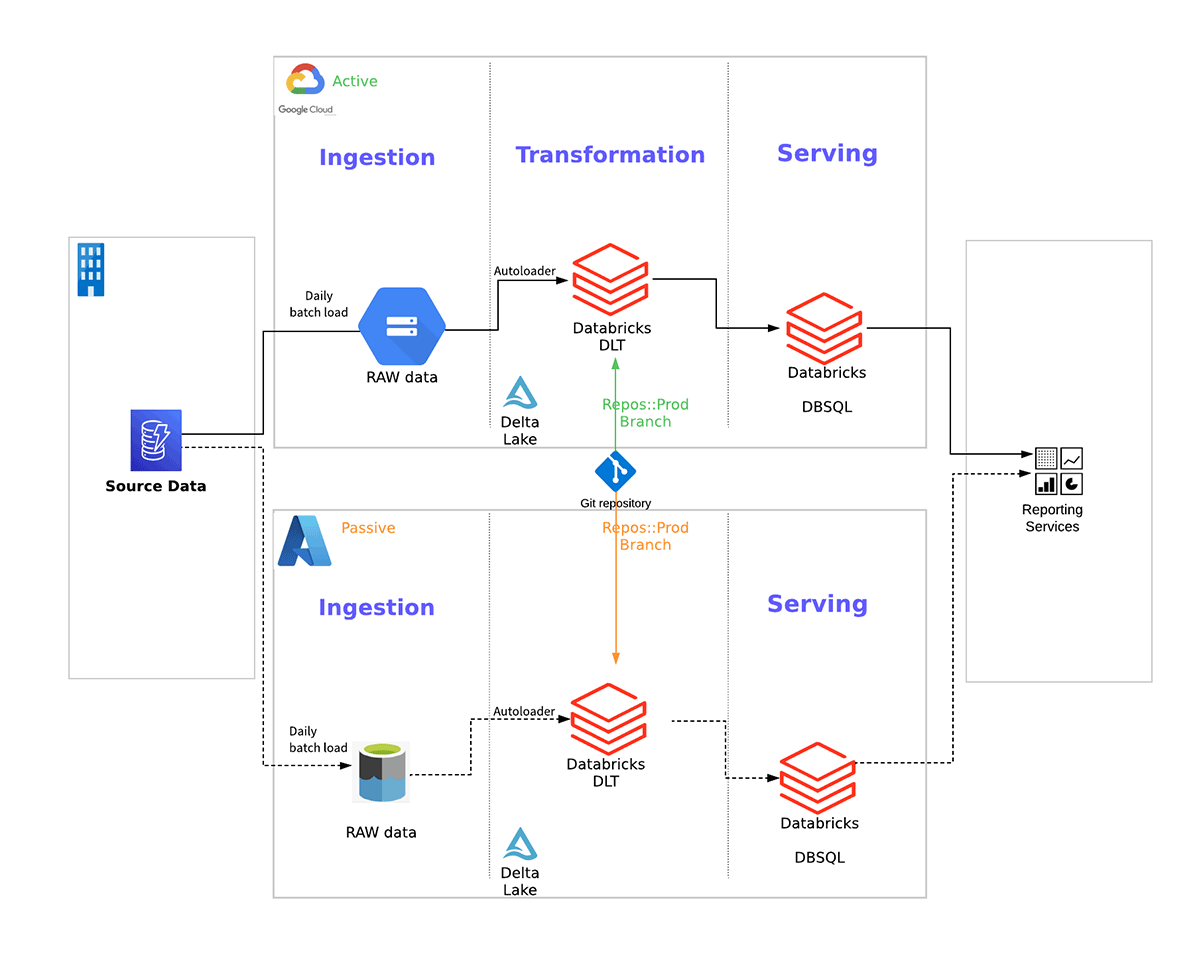

Below is an example of a multi-cloud solution for the General Ledger regulatory reporting services of a UK bank that leverages Databricks and follows the above blueprint. This approach allows the bank to meet its stressed-exit requirements while maintaining a single codebase that is portable between the clouds:

Conclusion

Financial institutions want to manage cloud vendor risk better and, in many cases, are required by regulation to be able to execute a stressed-exit strategy or to manage data-locality requirements that vary by region. The combination of current and evolving regulatory requirements, together with the risk of vendor pricing models changing, means that cloud portability for a hybrid cloud strategy is now an important item on the CIO and CTO’s agenda.

Databricks’ open-standards Lakehouse platform simplifies multi-cloud and hybrid cloud data architectures. Databricks allows organizations to develop and manage a single application that runs with the same functionality and optimized performance across all three of the major cloud vendors (Azure, AWS and GCP). Databricks’ core software stack is based on open-source code, which allows hybrid operations for a single application that has on-premises (self-hosted) deployments as well as cloud vendor deployments.

The combination of application and data portability, together with Databricks’ industry-leading price/performance, allows companies to reduce operating cost, simplify their architecture and meet their regulatory requirements.

To simplify secure deployment of the Lakehouse with integrated industry best practices, unified governance and design patterns, you can also get started with Industry Blueprints.