The climate changed and everyone quickly noticed how expensive Snowflake is.

How Snowflake fails – Benn Stancil

Why is Snowflake so expensive – Stas Sajin

Snowflake performance challenges – Slim Baltagi

Ok, so Snowflake is expensive. But what do I do about it?

- Avoid frequent updates

- Optimize for cost-per-query with apps running 24×7

- Tune slow queries

- Reduce auto-suspend to 1 or 2 minutes

- Build Snowflake chargeback dashboards

- Try third-party cost analyzers

- Set resource monitors and spend thresholds

Let me dig into each of these a bit more.

Modern databases like DynamoDB and MongoDB offer CDC streams.

Your stakeholders ask for fresher data.

You decide to update your warehouse more frequently and you run out of Snowflake credits in a week.

Snowflake is built for batch. It does expensive MERGE operations during ingestion, and CDC streams are likely to burn your compute credits in a week. If you ever see Kafka events or Snowpipe streaming into your warehouse, just mutter son of a batch and walk away.

Warehouses like Snowflake, Redshift, Bigquery are optimzed for long-running scan intensive queries over historical data (e.g. “what was our average selling price in France this year compared to last year?”). By design, they offer low cost-per-GB stored, but do expensive scan operations for every query. Having developers build high QPS data apps on them is highly inefficient (and slow and frustrating).

Real-time analytics platforms like Rockset, Druid, and Pinot are optimized for streaming ingest and the types of selective query patterns that data apps need, making this breed of databases the better choice for powering user-facing analytics. Queries are faster, and more efficient because they use indexes instead of brute-force scans. Both query latency and cost-per-query are lower.

For certain workloads you must optimize for cost-per-query not cost-per-GB. Use a warehouse like Snowflake for BI workloads with infrequent queries, and a real-time analytics database like Rockset for data apps that run 24×7. Using the right tool for the job typically means faster queries at lower compute cost.

“What do I do when my Snowflake query is slow? I kill the query and bump up the compute”

Apart from the better known performance tuning tricks like Data Clustering and Materialized Views, Snowflake has a fair number of slow query optimizations like reducing queueing, using result caching, tackling disk spilling, rectifying row explosions, fixing inadequate pruning.

Here are some useful tips: how to optimize slow queries

Run this Snowflake SQL query to find the most expensive queries from query history in last 30 days, and tune the more frequent ones.

5 minutes is a long time when you’re sitting still. And it’s a really really long time when you’re burning compute.

Spinning up a new virtual warehouse is fast. By default Snowflake sets auto-suspend to 5 minutes, but it’s easy to change it to 1 or 2 minutes.

“I use a Snowflake Large. How much does it cost me? I have no clue” (from actual Snowflake user)

“My CFO is asking me for invoices. I found the credits but still not sure how much I’m spending” (from Snowflake forums)

Here are some helpful tips: how to build these chargeback dashboards

By default only ACCOUNTADMIN role can view billing. First, grant all your users Monitor Usage privileges. Next, build an overall credit consumption dashboard with actual mapping of credits to dollars. And build credit consumption and query execution dashboards by warehouse. Share monthly reports with all users.

Is that the best I can do? This is a question that haunts the best of us.

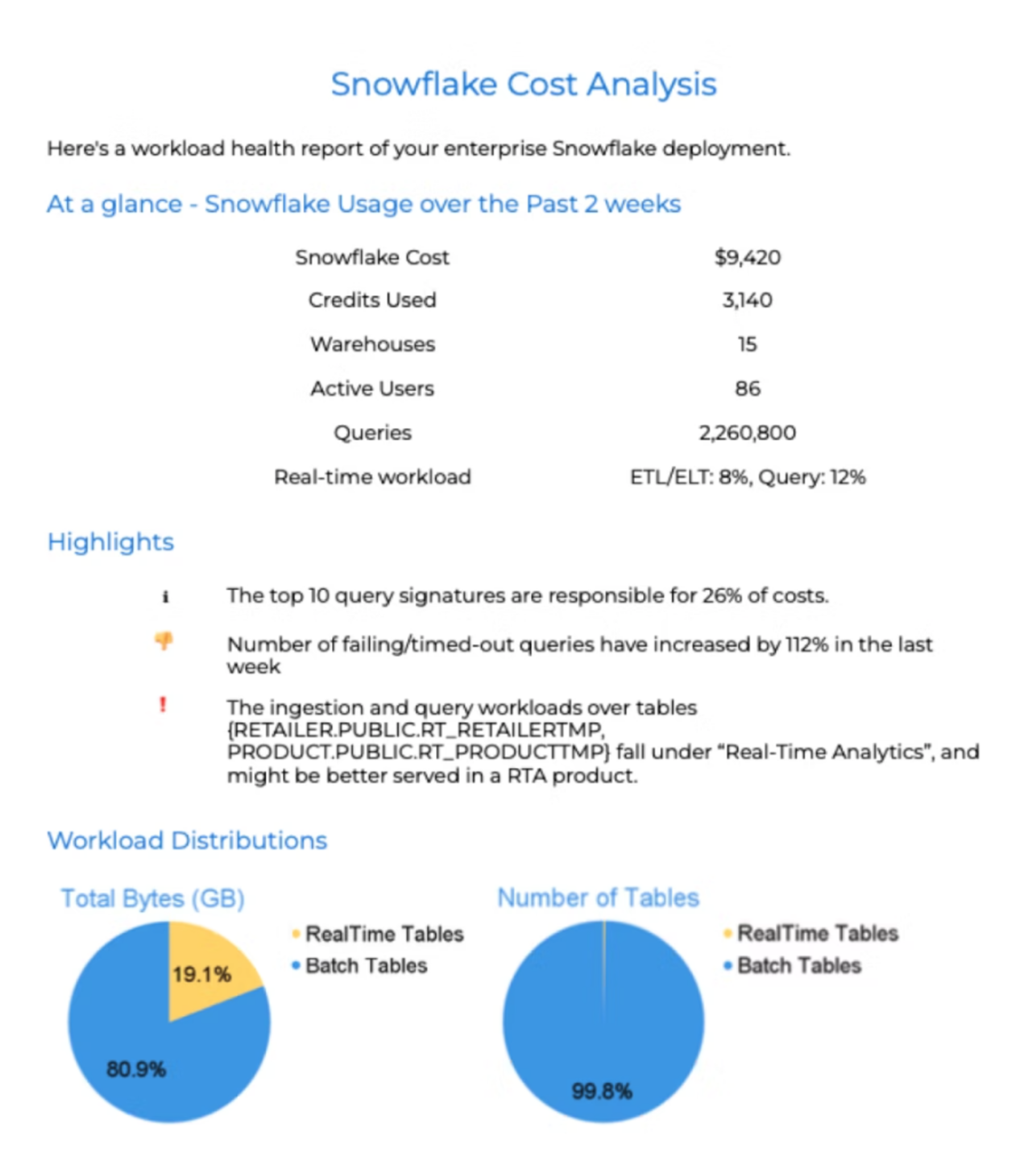

Here is a useful Snowflake Workload Optimization utility powered by Bluesky

Use third party cost analyzers which have intelligent monitoring, provide good industry benchmarks and offer step by step recommendations.

There are no sure shot ways to get rich. But some things are a slippery slope. Don’t be that guy (or gal).

Set hard limits on spend and setup notifications and alerts. When your warehouse reaches 50% of its spend threshold, inspect your ingest and query patterns and do the right thing.